polyglot-tools-docs

History

In 2016 I was asked to help evaluate a large and ugly codebase for a client, working closely with my colleague at the time, Ra-el Peters. The client had started a big-bang re-write of their legacy code, but that endeavour seemed to be stalling. We needed to quickly review both codebases and answer their key question:

Should they continue with the re-write, or revert to the legacy code and try a different approach?

I was familiar with Erik Dörnenburg's articles on Toxic code visualisation, but sadly most of the tools he referenced seemed to be abandoned, and the other tools we knew of were quite focused on particular languages. This codebase was a mix of old versions of C#, SQL stored procedures, hand-rolled jQuery+JavaScript, AngularJS, newer versions of C#, and probably others we weren't aware of. We didn't want to try to get SonarQube or similar installed and running in-depth metrics on this code - we wanted a quick way to get an overview, and drill down on the toxic parts.

I'd also read Adam Tornhill's "Your Code as a Crime Scene" and was quite keen to use his code-maat tools - which had the huge advantage of not really needing language parsers to work. Adam pointed out that purely looking at lines of code might be enough - in many cases, large files correlate with complexity.

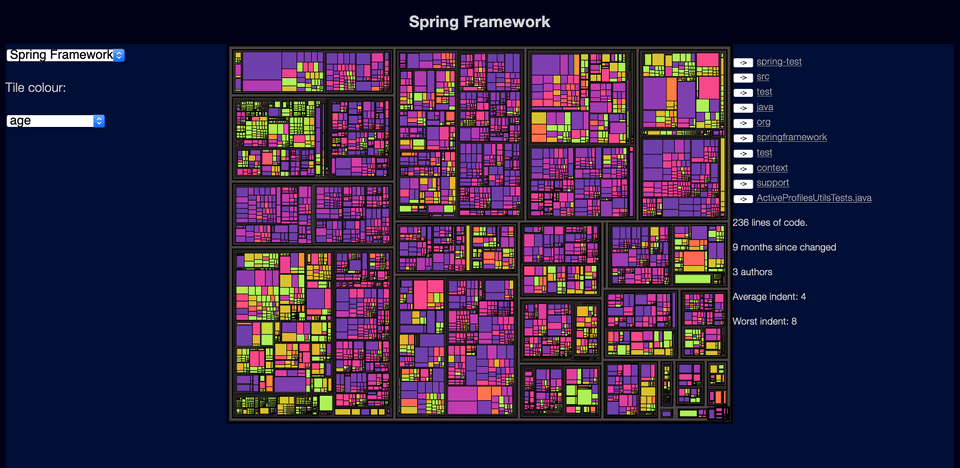

So Ra-el and I threw together some code, using cloc to count lines of code, code-maat to collect other metrics from source-control such as rate of change and number of authors, and D3 Treemaps to show file sizes.

I tinkered with this for a while - it basically worked, but progress stalled a bit. It was hard to extend beyond what code-maat offered. I added a module for indentation-based complexity, but both the scanning side and the visualisation were lacking - especially the visualisation, which was hard to change, and treemaps are just a bit ugly.

And that's where things sat from 2016 to 2018. I did a bit of packaging up and cleaning up, I talked about it at a few ThoughtWorks internal conferences and at UCL's 59th CREST Open Workshop - Multi-language Software Analysis - and then didn't do much more. (I also had a lovely toddler who took up a lot of my tinkering time!)

(Note if you watch the UCL video - I have read a lot more about metrics and research since then, and I'm not sure I agree with all the thoughts of my past self!)

In February 2019 I was working on a project where I had the chance to play with the Rust programming language - and I really liked the look of it. Not only was it fast, with some very clever memory management and safety features, it also had a great community and a great ecosystem. There were libraries for counting lines of code, there were libraries for processing git history - all the things I needed for my code scanning.

I then had a gap between projects, and thought it'd be fun to re-implement my Clojure code in Rust - both because I wanted to learn Rust, and because the Clojure code was quite slow, and I could tell it was going to get slower and slower the more I dug into other metrics.

So I started what is now the polyglot-code-scanner. It went remarkably smoothly - the existing Rust libraries meant I could ditch code-maat and build everything in one Rust program.

The UI was still pretty ugly though. I knew what I wanted - I'd done some reading, and found that Voronoi treemaps were probably the presentation I was after - but they were a lot of work to get running. Also, my UI was hard to change - I was using just D3 and otherwise mostly raw JavaScript, and it was hard to make it interactive.

Later in 2019, however, I worked on a project with some React, and it was pretty obvious that what I wanted was a new React-based UI. I also found the d3-voronoi-treemap library, which appeared to give me a way to build the layout I wanted, in D3!

Unfortunately running the Voronoi layout in real-time turned out to be too slow and buggy - but I eventually worked out that I could split the layout into a standalone program that ran in Node.js, so speed was less critical.

So in 2019/2020 I slowly built the rest of the tools up. I also decided to do some reading into the research behind Adam's books, which led to a fascinating rabbit-hole of research papers - I've already improved some metrics, and added a first stab at temporal coupling - and there's a lot of room for more growth.
